Neuromorphic Computing: When Silicon Learns to Think Like a Brain

Discover how neuromorphic computing revolutionizes AI with brain-like silicon chips.

What Is Neuromorphic Computing?

At its core, neuromorphic computing aims to mimic the structure and function of biological neural networks in hardware. It's like trying to recreate a jazz band using nuts, bolts, and silicon - ambitious, a little crazy, but potentially revolutionary.

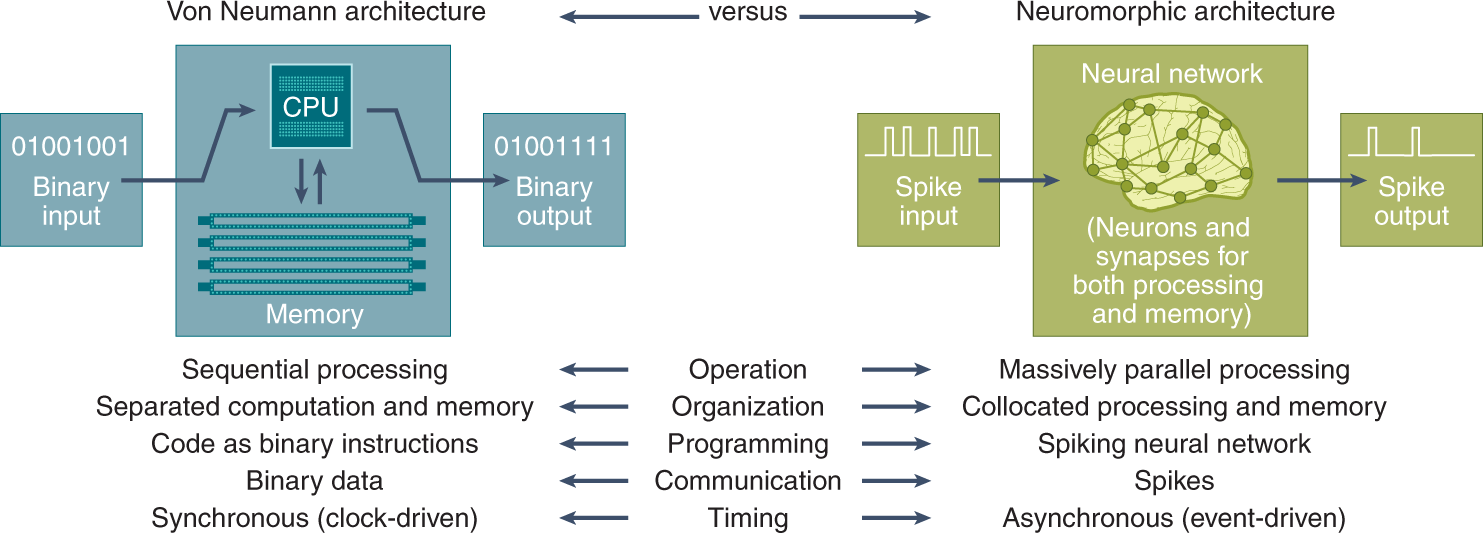

Traditional computers operate on the von Neumann architecture, with separate units for processing and memory. This design has served us well, powering everything from smartphones to supercomputers. But when it comes to tasks like pattern recognition or adapting to new situations, our brains run circles around even the most advanced silicon chips.

Neuromorphic systems, on the other hand, blur the lines between processing and memory. They're composed of artificial neurons and synapses that can compute and store information simultaneously, much like our own gray matter. If traditional computers are like well-organized filing cabinets, neuromorphic chips are more like a bustling metropolis of interconnected, chatty neurons.
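To make the "compute and store in the same place" idea a bit more concrete, here's a minimal Python sketch of a leaky integrate-and-fire (LIF) neuron, a simple spiking-neuron model that shows up throughout neuromorphic research. Treat it as a software toy, not a description of how any particular chip works: the class name, weights, leak factor, and threshold are all illustrative assumptions. The point is that the synaptic weights (the memory) and the membrane potential (the running state) sit right next to the arithmetic that updates them.

```python
from dataclasses import dataclass, field


@dataclass
class LIFNeuron:
    """Toy leaky integrate-and-fire neuron; every parameter here is illustrative."""
    threshold: float = 1.0  # potential at which the neuron fires a spike
    leak: float = 0.9       # fraction of the potential kept from one step to the next
    # Synaptic weights are stored with the neuron itself (the "memory" part).
    weights: list[float] = field(default_factory=lambda: [0.5, 0.3])
    potential: float = 0.0  # membrane potential: the neuron's running state

    def step(self, input_spikes: list[int]) -> int:
        """Advance one time step; return 1 if the neuron spikes, else 0."""
        # Leak a little, then integrate the weighted incoming spikes (the "compute" part).
        self.potential = self.leak * self.potential + sum(
            w * s for w, s in zip(self.weights, input_spikes)
        )
        # Fire and reset once the threshold is crossed.
        if self.potential >= self.threshold:
            self.potential = 0.0
            return 1
        return 0


neuron = LIFNeuron()
print([neuron.step([1, 1]) for _ in range(4)])  # → [0, 1, 0, 1]
```

Fed the same pair of input spikes four times, the potential builds up over two steps, crosses the threshold, resets, and starts again, so this neuron fires on every second step.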

The Brain as a Model: Nature's Supercomputer

So why look to the brain for inspiration? Well, our noggins are marvels of engineering, capable of incredible feats while sipping on a mere 20 watts of power. That's less than a typical 60-watt incandescent light bulb draws! Meanwhile, training a large language model like GPT-3 is estimated to have consumed roughly 1,300 megawatt-hours of electricity, about what 120 US homes use in a year.
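Strictly speaking, watts measure power (a rate), and energy is power multiplied by time. This little Python helper, a hypothetical name for illustration, converts a constant power draw into energy per year, assuming the brain's ~20 W figure and a 60 W incandescent bulb left on around the clock.

```python
HOURS_PER_YEAR = 24 * 365  # ignoring leap years


def annual_energy_kwh(power_watts: float) -> float:
    """Energy (kWh) used by a device drawing constant power for one year."""
    return power_watts * HOURS_PER_YEAR / 1000  # watt-hours -> kilowatt-hours


brain_kwh = annual_energy_kwh(20)  # the ~20 W figure for the brain
bulb_kwh = annual_energy_kwh(60)   # assumed 60 W incandescent bulb, always on

print(f"brain: {brain_kwh:.1f} kWh/year, bulb: {bulb_kwh:.1f} kWh/year")
# → brain: 175.2 kWh/year, bulb: 525.6 kWh/year
```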

The brain achieves this efficiency through massive parallelism, with its roughly 86 billion neurons working side by side rather than one step at a time. It's also incredibly plastic, constantly rewiring itself to learn and adapt. And perhaps most impressively, it's remarkably fault-tolerant. Lose a few neurons here and there, and your brain keeps on ticking.
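How does a network "rewire itself"? One widely studied answer in neuromorphic research is spike-timing-dependent plasticity (STDP): if an input neuron fires just before the neuron it feeds, the connection between them strengthens; if it fires just after, the connection weakens. Here's a minimal pair-based sketch in Python. The function name, learning rates, and 20 ms time constant are illustrative assumptions, not parameters taken from TrueNorth, Loihi, or any other chip.

```python
import math


def stdp_update(weight: float, t_pre: float, t_post: float,
                a_plus: float = 0.01, a_minus: float = 0.012,
                tau: float = 20.0, w_max: float = 1.0) -> float:
    """Pair-based STDP: adjust a synaptic weight from one pre/post spike pair.

    Spike times are in milliseconds; all constants are illustrative.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fired before post: strengthen (potentiate) the synapse.
        weight += a_plus * math.exp(-dt / tau)
    elif dt < 0:
        # Post fired before pre: weaken (depress) the synapse.
        weight -= a_minus * math.exp(dt / tau)
    # Keep the weight within [0, w_max].
    return min(max(weight, 0.0), w_max)


w = 0.5
print(stdp_update(w, t_pre=10.0, t_post=15.0))  # pre before post: weight goes up
print(stdp_update(w, t_pre=15.0, t_post=10.0))  # post before pre: weight goes down
```

The exponential falloff means spikes far apart in time barely change the weight, and each synapse only needs the spike timing of its own two neurons, so learning stays local.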

Neuromorphic chips aim to replicate these key features. Imagine a computer that could learn on the fly, adapt to new situations, and keep working even if parts of it fail. It's like giving your laptop a tiny, silicon brain transplant!
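One reason that kind of resilience is possible is redundancy: information is spread across many neurons instead of living in one place. As a toy illustration (not a model of any real chip), this Python sketch encodes a single value as the average firing rate of 100 hypothetical neurons, knocks out a tenth of them, and decodes again. The rates, the fixed "noise" pattern, and the damage pattern are all made-up assumptions.

```python
def population_estimate(rates: list[float], alive: list[bool]) -> float:
    """Decode a value as the mean firing rate of the neurons that still work."""
    surviving = [r for r, ok in zip(rates, alive) if ok]
    return sum(surviving) / len(surviving)


# 100 hypothetical neurons all encode the value 0.7, each with a small fixed offset.
rates = [0.7 + 0.05 * ((i % 5) - 2) for i in range(100)]

healthy = [True] * 100
damaged = [i % 10 != 0 for i in range(100)]  # every tenth neuron has "failed"

print(f"healthy: {population_estimate(rates, healthy):.3f}")
print(f"damaged: {population_estimate(rates, damaged):.3f}")
```

Losing 10% of the neurons nudges the decoded value from 0.700 to about 0.711 instead of wiping it out, which is the graceful degradation the paragraph above describes.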

Advantages of Neuromorphic Computing

The potential benefits of neuromorphic systems are enough to make any tech enthusiast drool. First and foremost is energy efficiency. In my experience visiting neuromorphic computing research facilities, I've seen chips that consume mere milliwatts of power while performing complex cognitive tasks. It's like watching a hummingbird outmaneuver a jumbo jet.
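Part of where those savings come from is that many neuromorphic chips are event-driven: work only happens when a spike actually arrives, instead of the whole circuit updating on every clock tick. The Python sketch below counts synaptic operations for one layer both ways, assuming a hypothetical 1,000-input, 100-output layer in which 2% of inputs spike during a given step. Operation counts are only a rough proxy for energy; real chips also pay for memory access, routing, and leakage.

```python
def dense_ops(num_inputs: int, num_outputs: int) -> int:
    """Clock-driven layer: every weight is used on every time step."""
    return num_inputs * num_outputs


def event_driven_ops(spikes: list[int], num_outputs: int) -> int:
    """Event-driven layer: only inputs that actually spiked trigger any work."""
    return sum(spikes) * num_outputs


num_inputs, num_outputs = 1000, 100
# Assumed sparse activity: every 50th input spikes this step (2% of inputs).
spikes = [1 if i % 50 == 0 else 0 for i in range(num_inputs)]

print("dense ops:", dense_ops(num_inputs, num_outputs))            # → 100000
print("event-driven ops:", event_driven_ops(spikes, num_outputs))  # → 2000
```

The sparser the activity, the bigger the gap, which is why spiking hardware tends to shine on inputs where little changes from moment to moment.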

But the advantages don't stop there. Neuromorphic systems excel at handling noisy, uncertain data - the kind of messy information we encounter in the real world. They can learn and adapt in real time, making them well suited to applications like robotics or autonomous vehicles. It's the difference between a car that needs to be reprogrammed for every new road condition and one that can learn to handle new terrain on the fly.

The Current State of Play

While neuromorphic computing might sound like science fiction, it's very much a reality. Tech giants and research institutions are pouring resources into developing brain-inspired chips. IBM's TrueNorth chip, for instance, packs a million neurons and 256 million synapses into a stamp-sized piece of silicon while drawing only about 65 milliwatts. Intel's Loihi chip, named after an underwater volcano (because why not?), has been reported by Intel to solve certain optimization problems up to 1,000 times faster than conventional processors.
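Those TrueNorth numbers are less arbitrary than they look. Published descriptions of the chip break it into 4,096 "neurosynaptic cores," each with 256 neurons fed by 256 input lines (axons) through a 256 × 256 synaptic crossbar. A few lines of Python show how that tiling produces the headline figures; the constant names are just labels for illustration.

```python
CORES = 4096            # neurosynaptic cores per chip
NEURONS_PER_CORE = 256
AXONS_PER_CORE = 256    # input lines into each core's crossbar

neurons = CORES * NEURONS_PER_CORE
# Each core's crossbar has one potential synapse per (axon, neuron) pair.
synapses = CORES * AXONS_PER_CORE * NEURONS_PER_CORE

print(f"{neurons:,} neurons, {synapses:,} synapses")
# → 1,048,576 neurons, 268,435,456 synapses
```

So "a million" is really 2^20 neurons, and "256 million" is 256 × 2^20 synapses, about 268 million in decimal terms.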

From what I've observed in the lab, these chips are already showing promise in areas like computer vision, natural language processing, and robotic control. But we're still in the early days - think of it as the "Wright brothers' first flight" moment of neuromorphic computing.

Potential Applications: From Sci-Fi to Reality

The potential applications of neuromorphic computing read like a sci-fi writer's wish list. Imagine smartphones that can understand and respond to natural language as effortlessly as a human. Or autonomous drones that can navigate complex environments with the agility of a hummingbird. Brain-computer interfaces that could restore sight to the blind or movement to the paralyzed are also on the horizon.

In my more fanciful moments, I envision a future where neuromorphic chips power artificial general intelligence - machines that can think, reason, and perhaps even feel like humans. But let's not get ahead of ourselves - we're still trying to teach these silicon brains to walk before they can run.

Challenges: It's Not All Smooth Sailing

Of course, creating brain-like computers is no easy feat. We're essentially trying to reverse-engineer the most complex object in the known universe using materials that are fundamentally different from biological tissue. It's like trying to recreate a gourmet meal using only Lego bricks - possible in theory, but fiendishly difficult in practice.

One major hurdle is scaling. While we can build individual chips with around a million neurons, the human brain boasts roughly 86 billion. Manufacturing processes, programming paradigms, and our understanding of neuroscience all need to advance significantly before we can approach the complexity of biological brains.
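To put that gap in numbers, here's a back-of-the-envelope Python calculation. It assumes a commonly cited estimate of about 86 billion neurons in the human brain and TrueNorth-scale chips with 2^20 (roughly a million) neurons each, and it deliberately ignores the far harder problem of wiring all those chips together.

```python
BRAIN_NEURONS = 86_000_000_000  # commonly cited estimate for the human brain (assumption)
NEURONS_PER_CHIP = 2 ** 20      # ~1 million, TrueNorth-scale chip

# Ceiling division: how many whole chips are needed to hold every neuron?
chips_needed = -(-BRAIN_NEURONS // NEURONS_PER_CHIP)

print(f"{chips_needed:,} chips")  # → 82,016 chips
```

And that counts neurons alone: a biological neuron typically has thousands of synaptic connections, while each TrueNorth neuron receives just 256 inputs.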

There's also the challenge of bridging the gap between neuroscience and engineering. As someone who straddles both worlds, I can tell you it sometimes feels like trying to translate between Klingon and Elvish!

Neuromorphic vs. Quantum: The Compute Showdown

No discussion of cutting-edge computing would be complete without mentioning quantum computers, the other "next big thing" in the world of bits and bytes. While both neuromorphic and quantum computing promise to revolutionize computation, they're tackling different problems.

Quantum computers are expected to excel at certain specialized problems, such as factoring large numbers or simulating quantum systems. Neuromorphic computers, on the other hand, are better suited to tasks that come naturally to biological brains, like pattern recognition or decision-making in uncertain environments.

In the future, we might see hybrid systems that combine the strengths of traditional, quantum, and neuromorphic computing. It's like assembling the Avengers of the computing world!

Ethical Implications: With Great Power...

As we venture into the realm of brain-like machines, we inevitably encounter thorny ethical questions. If we create computers that can think like humans, do they deserve rights? Could they one day become conscious? And what happens to human jobs as these systems become more capable?

These are questions that keep ethicists (and sci-fi authors) up at night. While we're still a long way from truly sentient machines, it's crucial that we grapple with these issues now, before neuromorphic AI becomes ubiquitous.

The Future is (Artificially) Neural

As we stand on the brink of this neuromorphic revolution, I can't help but feel a mix of excitement and trepidation. The potential to create machines that think, learn, and adapt like biological brains is truly mind-boggling. From revolutionizing AI to unlocking new frontiers in brain-computer interfaces, neuromorphic computing could reshape our world in ways we can scarcely imagine.

But as Uncle Ben once said, "With great power comes great responsibility." As we continue to push the boundaries of what's possible with silicon and synapses, we must also carefully consider the implications of our creations.

For those of you itching to get involved in this brave new world, fields like computer engineering, neuroscience, and machine learning are great places to start. There are also numerous open-source projects, such as the Brian and NEST simulators and Intel's Lava framework, along with online resources for hobbyists looking to dip their toes into neuromorphic waters.

In conclusion, neuromorphic computing represents a paradigm shift in how we approach artificial intelligence and computing as a whole. It's a field that marries the elegance of neurobiology with the precision of engineering, promising to bring us one step closer to creating truly intelligent machines.

As I watch my nephew grow and learn, marveling at the incredible capabilities of his developing brain, I can't help but wonder: will the neuromorphic computers of tomorrow learn and grow in similar ways? Only time will tell. But one thing's for certain - the future of computing is looking decidedly more brain-like, and I, for one, can't wait to see where this neural journey takes us.
